II. The Inevitability and Existential Risk of Artificial General Intelligence

Mitigating the Existential Risk of AI: Interventions to Enhance Human Intelligence

Table of contents:

I. Overview and Introduction | II. The Inevitability and Existential Risk of Artificial General Intelligence | III. Understanding Human Intelligence | IV. Reaching vs. Expanding Biological Potential | V. Biological Strategies to Expand Human Intelligence: Neurotransmitter Modulation | VI. Biological Strategies to Expand Human Intelligence: Neurotrophins | VII. From Neurons to AI: The Surprising Symmetry of Emergence | VIII. Biological Strategies to Expand Human Intelligence: Neurogenesis

Super-intelligent (or even merely human-level) AI systems present many threats to the human race. Some of these have been outlined by AI safety researchers, most famously Eliezer Yudkowsky (co-founder and research fellow at the Machine Intelligence Research Institute), who stated in a recent article: “Many researchers steeped in these issues, including myself, expect that the most likely result of building a superhumanly smart AI, under anything remotely like the current circumstances, is that literally everyone on Earth will die.” Yudkowsky believes that we have not done the necessary research or built in the safety mechanisms needed to control these systems: “Without that precision and preparation, the most likely outcome is AI that does not do what we want, and does not care for us nor for sentient life in general. That kind of caring is something that could in principle be imbued into an AI but we are not ready and do not currently know how.” Concerns like these have also led to an open letter calling for a pause on training AI systems more powerful than GPT-4. Even in the most optimistic scenario, where these safety problems are solved as recommended, we are still confronted with an ugly reality: humans in our current state, set beside AI, will be deprived of purpose, meaningful labor, and the ability to provide general economic value.

Before continuing, it’s important to outline the general presuppositions that lead to these conclusions:

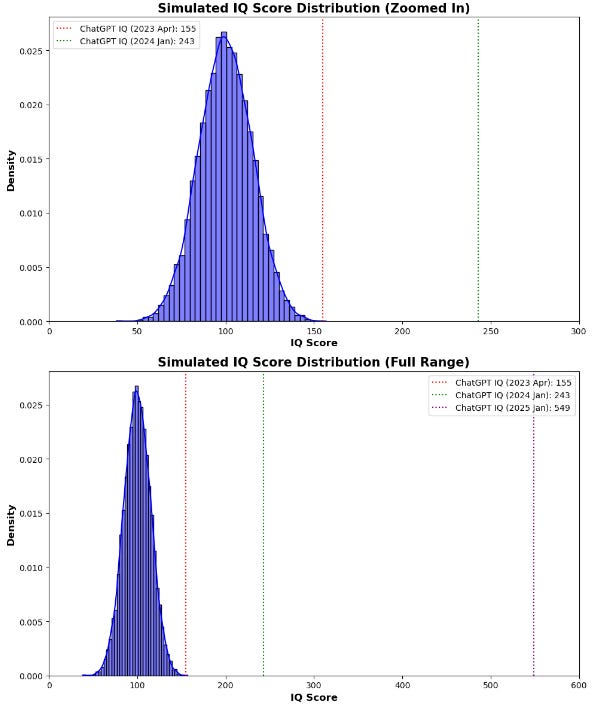

1. Intelligent behavior (and potentially consciousness) is substrate-independent (organic vs. silicon): Intelligence, in its simplest form, can be thought of as the ability to process information and convert it into actionable outcomes. Substrate independence simply means that this information processing is not confined to biological systems and could take place in inorganic matter, such as silicon chips. In fact, we already take for granted that machines do this in narrow domains, as with sensors, calculators, and narrow AI systems. General intelligent behavior, however, was long seen as the exclusive domain of biological systems, yet it is increasingly being emulated in silicon. Notable advances in AI, particularly in natural language processing (NLP), have showcased capabilities previously thought exclusive to humans; systems such as OpenAI’s GPT-4 and Google’s Bard illustrate this trend. Indeed, as outlined in the previous section, these language models already outperform 99.9% of humans on IQ tests, on a trajectory projected to reach an IQ score of 550 by 2025 (Figure 1).

Figure 1. A simulated distribution of 10,000 human IQ scores, assumed to follow a normal distribution with a mean of 100 and a standard deviation of 15. The red, green, and purple dotted lines represent the predicted IQ scores of ChatGPT for April 2023, January 2024, and January 2025, respectively (see the previous post for these assumptions). Note that the predicted IQ scores for 2024 and 2025 lie far above the range of typical human IQ scores, reflecting the rapid advancement of AI capabilities.
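The percentile claims above follow directly from the normal model assumed in Figure 1. The sketch below, assuming only that model (mean 100, standard deviation 15), uses Python's standard-library `statistics.NormalDist` to draw a 10,000-score sample like the one plotted and to convert an IQ score into the fraction of people expected to score below it. The helper names and the fixed random seed are illustrative choices, not part of the original analysis.

```python
from statistics import NormalDist

# Population model assumed in Figure 1: IQ ~ Normal(mean=100, sd=15)
IQ_MODEL = NormalDist(mu=100, sigma=15)


def simulate_scores(n: int = 10_000, seed: int = 0) -> list[float]:
    """Draw n simulated human IQ scores, as in Figure 1 (seed is arbitrary)."""
    return IQ_MODEL.samples(n, seed=seed)


def fraction_below(score: float) -> float:
    """Expected fraction of the modeled population scoring below `score`."""
    return IQ_MODEL.cdf(score)


def z_score(score: float) -> float:
    """Number of standard deviations `score` lies above the population mean."""
    return (score - IQ_MODEL.mean) / IQ_MODEL.stdev


# Score needed to outperform 99.9% of the modeled population
threshold_999 = IQ_MODEL.inv_cdf(0.999)

print(f"{threshold_999:.1f}")  # 146.4
print(z_score(550))            # 30.0
```

Under this model, outperforming 99.9% of people requires a score of roughly 146, while a score of 550 sits 30 standard deviations above the mean, so far into the tail that `fraction_below(550)` rounds to exactly 1.0 in floating point.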

2. Machine Intelligence Will Continue to Advance: The evolution of AI has been driven by rapid improvements in both hardware and software, yielding more powerful, efficient, and sophisticated systems. On the hardware side, progress has largely tracked Moore’s Law, the observation that the number of transistors on an integrated circuit doubles approximately every two years [Figure]. Gordon Moore first made this observation in 1965 (initially as a yearly doubling, revised to every two years in 1975), when chips contained only dozens of components; today’s chips contain tens and even hundreds of billions of transistors. By some estimates, the raw computational throughput of the largest modern machines already exceeds that of the human brain, even without further advancement. By comparison, human intelligence changes only incrementally over evolutionary timescales, with modest (if any) observable improvements in our biological hardware. Changes in human intelligence over the last century, which are predominantly environmental, have been modest (the Flynn effect), and in some countries the trend appears to have reversed in recent decades. Even where positive, such environmental gains are likely to yield only modest returns. Furthermore, there is no known reason to expect a fixed ceiling on machine intelligence.
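The doubling rule can be written as N(t) = N0 * 2^((t - t0) / 2), where N0 is the count in a starting year t0. The sketch below applies it with an assumed starting point of 64 components in 1965, consistent with the double-digit counts of that era but an illustrative figure rather than any specific chip. The function name `projected_transistors` and its defaults are hypothetical choices for illustration.

```python
def projected_transistors(year: int, base_year: int = 1965,
                          base_count: float = 64, doubling_years: float = 2.0) -> float:
    """Transistor count predicted by a fixed doubling period (Moore's Law).

    base_count=64 in 1965 is an illustrative assumption, not a measured value.
    """
    doublings = (year - base_year) / doubling_years
    return base_count * 2 ** doublings


# 58 years after 1965 is 29 doublings: 64 * 2**29
print(f"{projected_transistors(2023):,.0f}")  # 34,359,738,368
```

Under these assumptions the projection lands at roughly 34 billion, the same order as the tens of billions of transistors on today's large chips; varying `doubling_years` shows how sensitive such extrapolations are to the assumed doubling period.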

Machine intelligence as generated by software (the mind of the machine) will likewise continue to advance. The first versions of general AI, in the form of large language models, are only now emerging. While the growth of their intelligence is currently guided by humans, increasingly super-intelligent AGI systems could very well be tasked with improving their own intelligence. This creates the risk of an intelligence explosion: a scenario in which an AI analyzes the processes that produce its intelligence, improves upon them, and creates a successor that iterates on itself in turn. The process repeats in a positive feedback loop, with each generation more intelligent than the last and better able to improve its own intelligence. With no clear ceiling on intelligence, this feedback loop could culminate in something unrecognizable.
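The difference between ordinary progress and a positive feedback loop can be illustrated with a deliberately crude toy model. The sketch below assumes, purely for illustration, that each generation's improvement is proportional to its current capability (feedback), and contrasts this with a fixed improvement per generation (no feedback). The capability units, the `gain` and `step` parameters, and the function names are hypothetical and say nothing quantitative about real AI systems.

```python
def with_feedback(initial: float, gain: float, generations: int) -> list[float]:
    """Each successor improves by an amount proportional to its predecessor's capability."""
    levels = [initial]
    for _ in range(generations):
        current = levels[-1]
        levels.append(current + gain * current)  # more capable systems make bigger improvements
    return levels


def without_feedback(initial: float, step: float, generations: int) -> list[float]:
    """Each generation adds the same fixed improvement, regardless of capability."""
    return [initial + step * n for n in range(generations + 1)]


print(with_feedback(1.0, 0.5, 3))     # [1.0, 1.5, 2.25, 3.375]
print(without_feedback(1.0, 0.5, 3))  # [1.0, 1.5, 2.0, 2.5]
```

Even this constant-gain version grows exponentially rather than linearly; a model in which the gain itself rises with capability would grow faster still.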

3. Processing Speed of Silicon versus Biological Substrates: Another major advantage of artificial systems over biological ones is the speed of information processing. In biological systems, neurons transmit information through a combination of electrical signals (action potentials) and chemical signals (neurotransmitters released across the synaptic gap). The conduction speed of action potentials is highly variable, ranging from about 1 meter/second to over 100 meters/second depending on the type of neuron and whether its axon is myelinated. Moreover, the complete process of transmitting a signal across a synapse (releasing neurotransmitters, binding to receptors, and initiating a response) typically takes on the order of milliseconds.

Silicon-based processors, on the other hand, operate on far faster timescales. Modern processors can execute billions of operations per second, so each operation takes on the order of nanoseconds. This gap of roughly six orders of magnitude (milliseconds vs. nanoseconds) means that a computer of the same intelligence could, in principle, work on a problem about a million times faster. To put that in perspective, one hour of AI computation would be equivalent to roughly 114 years of continuous human thought. Let that sink in.
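The arithmetic behind the million-fold and 114-year figures is short enough to check directly. The sketch below assumes, as the text does, a characteristic step of about one millisecond for a synaptic signal and about one nanosecond for a processor operation; both are order-of-magnitude figures, not measurements of any particular neuron or chip. Times are kept in integer nanoseconds to avoid floating-point rounding in the ratio.

```python
import math

NS_PER_MS = 1_000_000

# Order-of-magnitude step times, in nanoseconds (assumptions, not measurements)
SYNAPTIC_STEP_NS = 1 * NS_PER_MS  # ~1 ms for one synaptic transmission
PROCESSOR_STEP_NS = 1             # ~1 ns for one processor operation
HOURS_PER_YEAR = 24 * 365         # ignores leap years


def speedup_factor(bio_step_ns: int, silicon_step_ns: int) -> float:
    """How many silicon steps fit into one biological step."""
    return bio_step_ns / silicon_step_ns


def human_years_per_machine_hour(speedup: float) -> float:
    """One machine hour equals `speedup` human hours; convert that to years."""
    return speedup / HOURS_PER_YEAR


speedup = speedup_factor(SYNAPTIC_STEP_NS, PROCESSOR_STEP_NS)
print(f"speedup: {speedup:,.0f}x, about 10^{math.log10(speedup):.0f}")
print(f"1 machine hour ~ {human_years_per_machine_hour(speedup):.0f} human years")
```

The 114-year figure assumes round-the-clock human effort; counting only waking or working hours would make the human-equivalent time considerably longer.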

4. Brain-Machine Interfaces and the Limitations of the Human Brain: Brain-machine interfaces (BMIs) are devices, often implanted in the skull, that enable direct communication between brains and machines. In their current state of development, BMIs detect brain signals, decipher (process) them, and translate them into commands that devices output as actions. There is, however, vast potential for this communication to become bidirectional, and companies like Neuralink are exploring and developing this technology to augment human cognition with artificial intelligence. Even so, the biological constraints of the human brain would still limit the effectiveness of such augmentation: the brain's processing speed, plasticity, and overall cognitive capacity cannot keep pace with the exponential growth observed in AI capabilities.
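The detect, decipher, and translate steps described above can be sketched in a few lines. The example below is a minimal sketch under stated assumptions: it uses downward threshold crossings as a stand-in for spike detection and a simple linear decoder that maps per-channel spike counts to a two-dimensional cursor velocity. All names, weights, thresholds, and voltage traces are hypothetical. Real BMI systems often use more elaborate signal processing and decoders (for example, Kalman filters), which this sketch does not attempt to reproduce.

```python
from dataclasses import dataclass


def count_threshold_crossings(samples: list[float], threshold: float) -> int:
    """Detect: count downward crossings of a voltage threshold (a proxy for spikes)."""
    return sum(1 for prev, cur in zip(samples, samples[1:]) if prev > threshold >= cur)


@dataclass
class LinearDecoder:
    """Decipher and translate: map per-channel spike counts to a 2D velocity command.

    The weights and baselines are hypothetical; in practice they would be fit
    from calibration data recorded while the user attempts known movements.
    """
    weights_x: list[float]
    weights_y: list[float]
    baseline: list[float]  # each channel's resting spike count

    def decode(self, spike_counts: list[int]) -> tuple[float, float]:
        # Remove each channel's resting activity, then take weighted sums
        centered = [c - b for c, b in zip(spike_counts, self.baseline)]
        vx = sum(w * c for w, c in zip(self.weights_x, centered))
        vy = sum(w * c for w, c in zip(self.weights_y, centered))
        return vx, vy


# Two hypothetical channels: raw voltage traces from one time bin
channel_a = [0.0, -5.0, 0.0, -6.0, -1.0, 0.0, -7.0, 0.0, -5.0, 0.0]
channel_b = [0.0, -1.0, -5.0, 0.0, -2.0, 0.0, -1.0, 0.0, -3.0, 0.0]
counts = [count_threshold_crossings(ch, threshold=-4.0) for ch in (channel_a, channel_b)]

decoder = LinearDecoder(weights_x=[1.0, -1.0], weights_y=[0.5, 0.5], baseline=[2.0, 2.0])
print(counts, decoder.decode(counts))  # [4, 1] (3.0, 0.5)
```

Each stage of this pipeline runs on electronic timescales; the bottleneck the text points to is the biological signal on the other side of the interface.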

Taken together, these factors underscore the urgency of addressing the existential risk posed by AGI. If left unchecked and unguided, the rise of machines that surpass human intelligence could have profound and potentially catastrophic implications. Hence, it is vital to explore potential mitigation strategies, one of which is enhancing human cognition.
