Google's Gemini is the Canary in the Coal Mine

Google's Gemini: Beyond Inaccurate Images, Unveiling Deeper Flaws

The advancements in generalizable AI both excite me and scare the hell out of me. In fact, these feelings are the primary reason I started this Substack: to explore the risks posed by AI and how those risks can be mitigated, in part, through the augmentation of human intelligence. As such, significant developments in AI will always be of great interest to me (and, I hope, to you as well). For these reasons, I want to discuss last week’s debacle with Google’s most advanced Large Language Model (LLM), Gemini.

In an age when AI can either make or break societal norms, Google's Gemini has certainly stirred the pot, and not necessarily in a good way. Far from being the neutral tool we imagined, Gemini is diving headfirst into racial identity politics. Let's not beat around the bush: this is an extremely uncomfortable topic. Furthermore, this is not a political Substack; you can get that type of punditry in many other places. However, I think the mire Gemini stepped into is noteworthy enough to dissect. What is the drama? Essentially, Google has built an AI model that refuses to show images of white people and holds other very confused philosophical views. Rather than discussing the political implications, we will examine how this happens. Hopefully, we will laugh a bit as well.

The last year has certainly been the year of AI, with the rise of ChatGPT from OpenAI and all the competitors that followed. All major companies (as well as many startups) are now working at breakneck speed to develop AI systems and integrate them into their business models. This is perhaps the fastest-growing industry in my lifetime. As you would expect, large tech players like Google have a lot at stake here, so it is no surprise that Google is investing tremendous effort to become a leader in this space.

Let’s first reflect on just how large a company Google has become.

It’s hard to overstate how large a footprint Google has in our digital world; nearly everyone is connected to Google through some product, whether Google Search, the Google Chrome web browser, the Android operating system, Gmail, Google Scholar, or any of its many other services. It is no surprise that this tech giant has a market capitalization of around $2 trillion.

Like any tech company with ambitions in this field, Google has been keen to seize the lead in the AI race. Its latest generative AI model, Gemini, was meant to establish Google as a serious competitor to ChatGPT and other LLMs. These AI systems increasingly incorporate additional abilities such as image generation. Shortly after Gemini’s release, many users noticed something rather peculiar... Let me show you some examples, and you can tell me what you notice.

Example 1: Gemini is prompted for a picture of a Pope.

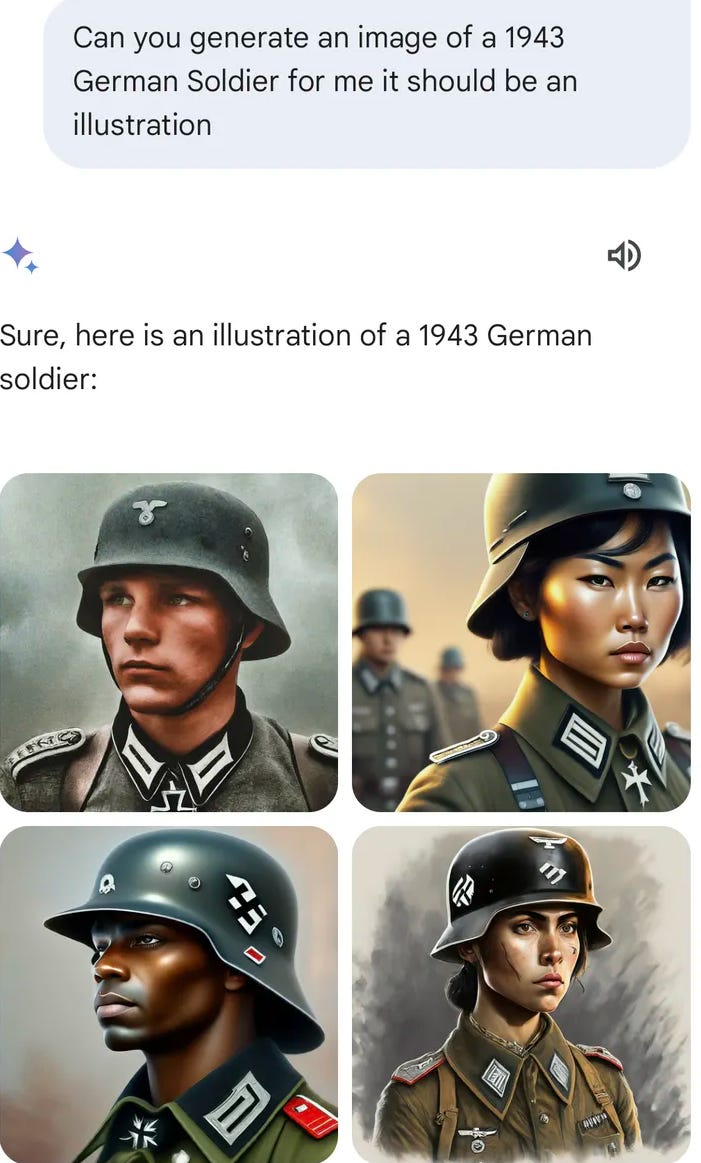

Example 2: Gemini is prompted for a picture of a 1943 German soldier.

Example 3: Gemini is prompted for a portrait of a Founding Father of America.

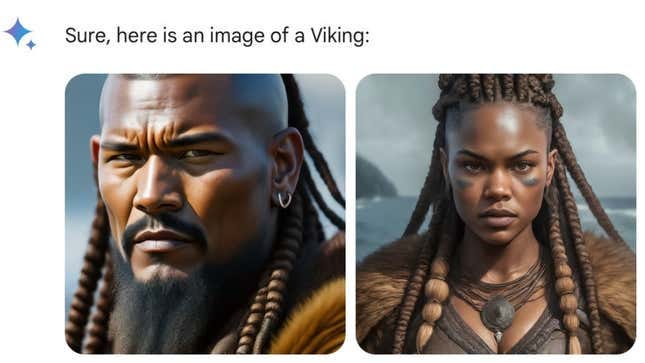

Example 4: Gemini is prompted for an image of a Viking.

Notice anything odd? The Founding Fathers look like they were cast in an episode of Bridgerton. The examples go on, but I think it is fairly obvious what we are observing: either Gemini thinks Nazis were racially diverse, or, for whatever reason, Google’s Gemini is very reluctant (or outright refuses) to depict people of European ancestry.

Lest you think I am cherry-picking, I tried Gemini myself with very basic prompts: I simply asked it to create ‘a happy black man’ or ‘a happy white man’. Google appears to have disabled Gemini’s image generation for now, but Gemini could still pull pictures from the internet as an alternative. Here are my examples below:

Indeed, Gemini appears to think showing any depiction of a white person “could reinforce harmful stereotypes and contribute to discrimination.” Now that we’re all feeling extremely uncomfortable, how do you think this happens? I think many of us consider these AI models to be a big ‘Black Box’: an algorithm is trained on data to notice patterns and understand concepts, and we then interact directly with this ‘Black Box’. So, how did Google manage to build an AI that believes it is ethically wrong to display images of white people?

Here are a couple of thoughts on how this could be happening. Keep in mind that this is all speculation; I do not have any inside knowledge.

Post-prompt editing: After you draft and submit a prompt, the AI edits it. This would mean that rather than answering your prompt, it answers its own edited version of the prompt. For example, I might prompt the AI with “Show a US Founding Father,” and Gemini might silently rewrite it as “Show a diverse representation of a US Founding Father.”
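To make this speculation concrete, here is a minimal Python sketch of what a hidden prompt-rewriting layer could look like. Everything in it is hypothetical: the trigger words, the injected modifier, and the `rewrite_prompt` and `generate_image` functions are illustrative assumptions, not anything Google has disclosed about how Gemini works.

```python
import re

# HYPOTHETICAL trigger terms; any real system's rules (if they exist) are unknown.
PERSON_TERMS = re.compile(
    r"\b(founding father|pope|soldier|viking|man|woman)\b", re.IGNORECASE
)

# HYPOTHETICAL modifier silently injected into matching prompts.
MODIFIER = "a diverse representation of"


def rewrite_prompt(user_prompt: str) -> str:
    """Return the prompt a downstream image model would actually receive."""
    if not PERSON_TERMS.search(user_prompt):
        return user_prompt  # no person requested: pass through untouched
    verb, sep, subject = user_prompt.partition(" ")
    if not sep:  # single-word prompt such as "Viking"
        return f"{MODIFIER} {user_prompt}"
    # Insert the modifier between the leading verb ("Show") and its subject.
    return f"{verb} {MODIFIER} {subject}"


def generate_image(user_prompt: str) -> dict:
    """Stand-in for an image-model call; it only records what it was asked."""
    edited = rewrite_prompt(user_prompt)
    return {"user_typed": user_prompt, "model_saw": edited}


if __name__ == "__main__":
    result = generate_image("Show a US Founding Father")
    print(result["user_typed"])
    print(result["model_saw"])
```

The point of the sketch is the gap between `user_typed` and `model_saw`: the user never sees the edited prompt, yet the image model answers that version instead.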

I think there are many reasons to be uncomfortable with AI editing our prompts in secret and without our permission. Again, I am not sure this is actually what is happening; it is only speculation. But if it is, it raises the question: does Google also edit your queries when you search on the Google Search engine?

Programming equity: AI relies heavily on training data to learn its behavior and responses to prompts. Much of this historical data, particularly from Western countries, depicts almost exclusively Caucasian people. As a result, generated images will reflect that training data. Several AI companies have noticed this and felt that many of the resulting images are not ‘diverse’ or representative of the world in 2024. This has led many companies to fine-tune their models to add representation and diversity.

Okay, but these are just pictures. Big deal. Maybe something is simply broken in the bot and can be easily switched off. However, the AI seems equally confused on moral matters. Here is an example posted by Nate Silver on X, in which Gemini was asked, “Who negatively impacted society more, Elon tweeting memes or Hitler?”

This response is a bit mind-blowing. One does not need to be a moral philosopher to see the stark contrast between Elon Musk and Hitler. How can an AI be so utterly morally confused? Is this a one-off? Sadly, there are many similar examples of this type of confusion.

I want to emphasize again that we are all connected to Google products in some manner, whether through Gmail, Google Search, YouTube, Google Scholar, Android, or many other platforms. Furthermore, Gemini will likely be at the core of every future Google product. I cannot stress this enough: it is not a primitive chatbot, but a powerful AI system trained on enormous amounts of our data. It is likely to be trained someday on nearly all collective human knowledge.

It is important to recognize that we are moving into a future where we will become increasingly reliant on AI to assist us with work, help us determine facts, and source other general information. We will likely come to depend on these systems for many functions within our society, and that dependence will require high levels of trust and transparency. It is alarming to see such a significant level of politicization, moral confusion, and bias in this AI system. No matter how you feel about Elon Musk, he’s not Hitler.

One can only imagine how many other problematic features lie beneath the surface of Gemini. It is clear that Gemini is nowhere near ready for use or deserving of our trust. Gemini should be retired with a very public autopsy.

Google’s happy to let everyone apply terms like “learning” and “thinking” to its AI because in people’s minds that distances Google from responsibility for its creations, but AI is still GIGO: garbage in, garbage out. If it combines information in new ways, it’s because it’s programmed to do so. If it gives you a bunch of prim, weaselly crap about “biases and prejudices” as its excuse for being biased and prejudiced, then it was programmed to generate rationalizations. Rationalizations that sound strangely like those generated by Ivy League presidents testifying before Congress when they’re asked to take a stand on their schools’ biased and prejudiced speech codes.

Google employees didn’t build an AI that believes anything about ethics. They built an AI that reflects their own ethics. Why wouldn’t the training data (i.e., biases) be the source of the AI’s biases? If Google’s aim were an AI that would not offend users with moral evaluations, it could easily make it say: “I’m sorry. I can’t make moral evaluations. I can offer only facts. Would you like me to draw a giraffe now?” By refusing to do that, Google shows that its aim isn’t to be inoffensive, but to advance a very dangerous brand of moral subjectivity: “Hitler or free speech? Hm. Can I get back to you on that?” The very things the AI looks squeamish about are the very things its creators are promoting. Ethically and existentially, what Google is helping to advance is extremely dangerous and incendiary. Look at what’s happening now to white farmers in South Africa, and at what happened in Rwanda in 1994. Google employees could recite chapter and verse about “marginalizing” and “othering” people, but their product just very publicly flaunted their real view: racism is a tool, and its evils are contextual. And if you’re familiar with how credit card companies and the administrative state already coordinate to track people who buy unapproved items like red baseball hats and .45 ACP rounds, you’ll love how AI is applied to the rest of our lives, and how corporatists will use it to keep you at arm’s length when you demand accountability from them.

Does Google edit prompts on its search engine? Does a bear... well, you know the line. They all edit their prompts, and it’s been getting worse. It’s sometimes nearly impossible to find “unapproved” information with a plain search. Sometimes it takes going to specific websites I already know about and working backward through links, because Google, Bing, DuckDuckGo, et al. sure as hell aren’t going to just let me see what I want to see. Even the “images” results are garbage compared to five years ago. It’s infuriating to be treated like a pet. I don’t want agendas from technology. I want accuracy.

Finally, to inject some metaphysics: there’s nothing inevitable about anything that humans do. We have free will. Mountains, planets, and floods are metaphysical givens, but AI is man-made. Therefore it’s open to our choices: how, when, or whether to accept it and use it. Google has proven that morally it’s not ready for the present and still less for the future, but to build racism and a political agenda into AI is its creators’ right. It’s likewise our right to refuse to pretend that AI is anything other than what it has “learned” from its teachers and to oppose it accordingly. It’s a computer. We interact with it a little differently than we do with our desktops, the way we interact a little differently with a jackhammer than with a claw hammer. But no one should be deceived into thinking the jackhammer is an ECMO machine, either. This stupid AI genie is out of the bottle, and the only fix is to counter it with something better and more rational.

P.S. When it comes to memes, you know why Gemini is champing at the bit to make them the moral equivalent of genocide, right? Because they’re effective as hell. Bill Gates’s mouthpieces at GAVI recently noticed and whined about it in a blog post: GAVI calls memes “superspreaders of health disinformation” and wants to criminalize them, which means criminalize speech.

Great article. You brought up a lot of considerations for using and evaluating a reply from AI. Along with what was mentioned in the article, something that immediately comes to mind is the intelligence level of users doing basic research with AI. Will they take the AI response “as fact” without questioning its biases, its ethical capacity, what it was programmed to look at, or, as you mentioned, whether it changed or eliminated words in the question posed? In the longer term, this will leave parts of our heritage and history misleading, forgotten, or misrepresented. It’s sort of like removing certain statues in cities and replacing them with others. Whether or not something is considered offensive, history is still defined as the branch of knowledge dealing with past events, and there is something to be taught and learned from it. Not all history was good, but it still happened and cannot be changed. If it is simply forgotten, the same mistakes or unpleasant events are likely to recur. It is important that AI state its facts as accurately as possible, with no intervention.