Fun with OpenAI's Sora!

Short Post

OpenAI just launched its new AI video generation tool, Sora! In this quick post, I’ll share a couple of experiences I had experimenting with it.

But first—what is Sora?

Sora is OpenAI’s latest innovation in AI video generation, designed to transform text prompts into dynamic, visually captivating videos. Powered by advanced machine learning models, Sora enables users to create short clips simply by describing scenes, characters, and actions in natural language. The tool interprets prompts and synthesizes animations to produce high-quality results. Sora has a truly intuitive interface and makes professional-grade video production accessible to a wide range of users—from content creators and marketers to casual experimenters.

Rather than diving into a comprehensive feature review (YouTubers have that covered, and I’ll share links to their videos below), I thought it would be more fun to highlight a few of my own experiments. Hopefully, it inspires you to try Sora for yourself and unleash your creativity!

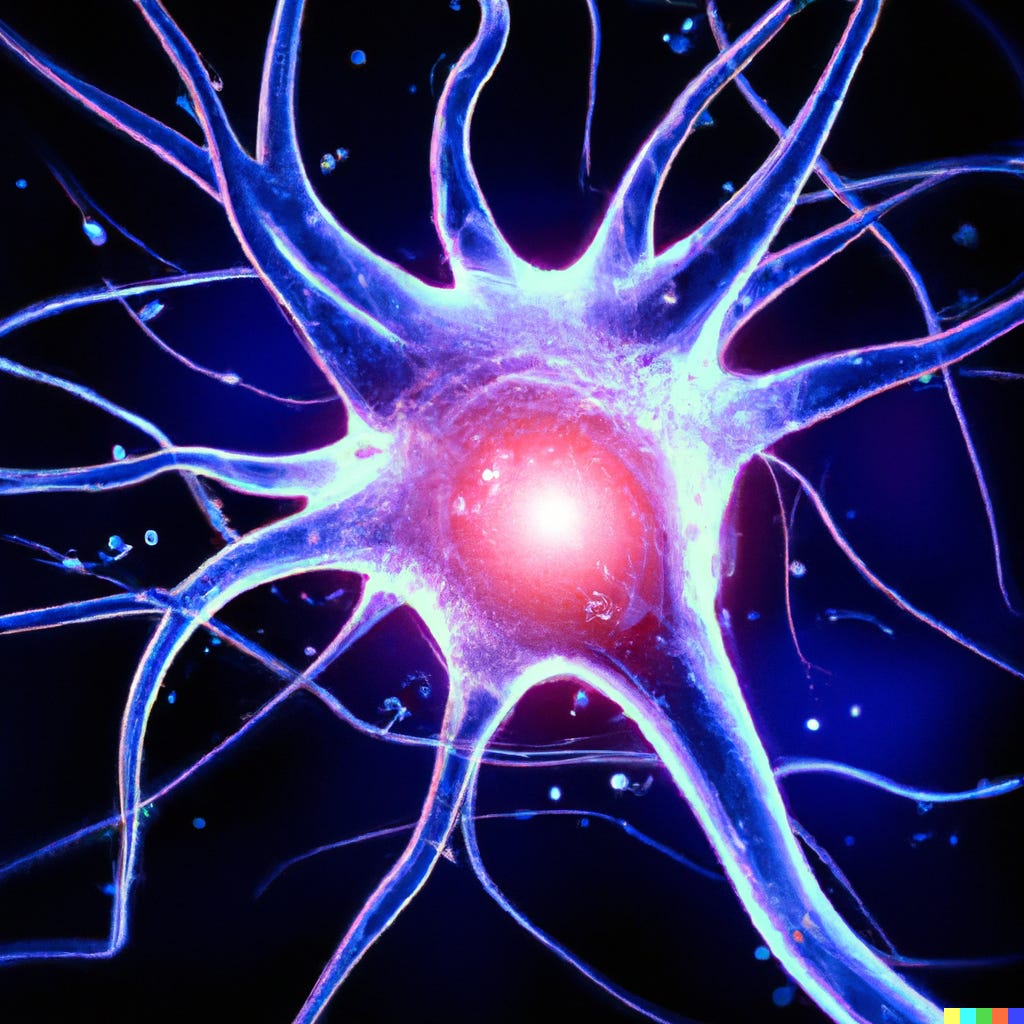

I am not an expert with Sora, but from what I see, it offers two creative paths: you can either upload an image or object as a starting point and request a video to be generated around it, or you can craft a video entirely from scratch based purely on your prompt. I decided to start with something simple and meaningful—the Neural Nexus Substack logo.

This logo was originally created using DALL-E 3 early last year. My goal was to represent a neuron in the midst of firing, a design that felt perfectly aligned with the theme of Neural NeXus. With that inspiration in mind, I uploaded the logo to Sora and let the tool work its magic. Below are a few of the videos I generated using this image as a starting point.

Sora Video 1:

Sora Video 2:

Next, I wanted to generate a complete video from scratch. My idea was to attempt to create a Woolly Mammoth made from scrap yard components.

Prompt: A large, fictional Mammoth made entirely of intricate mechanical parts lumbers through a sprawling junkyard. Its body is a patchwork of metal plates, gears, and wires, with glowing eyes and steam puffing from its trunk. The junkyard is a chaotic landscape of discarded machinery, rusted vehicles, and towering piles of scrap metal. The mechanical elephant moves with surprising grace, its joints whirring and clanking softly as it explores its environment under the grey, overcast sky.

Pretty neat, right? Although, as a friend pointed out, the movement is peculiar. The Mammoth appears to pick up the frontmost foot to step forward rather than the back foot.

A couple more for fun.

Prompt: A couple in their early 30s salsa dancing.

Again, you can see some awkwardness in the movement. There seems to be some broken physics and an issue with object permanence.

Prompt: A thylacine opening its mouth wide.

A fun and beautiful video, but not quite what I’d expect from a thylacine.

Prompt: A bull running toward a matador

Sometime in the near future, a single person will be able to generate an entire feature film from their computer in a matter of minutes. My overall impression is that we’re 80-90% of the way to having truly compelling AI-generated video capabilities at our fingertips. While many videos still show clear signs of being AI-generated because of small abnormalities, there are already quite a few examples that, to me, are indistinguishable from reality. The question is: how much more effort will it take to close that final gap and eliminate these uncanny imperfections?

Perhaps the better question is: once we achieve lifelike video generation, how will we know what’s real on the internet? Will we ever be able to trust digital content again? We are rapidly heading toward a future where distinguishing real digital content from fabricated content becomes impossible, which raises unsettling questions about truth, trust, manipulation, and reality in the digital age.

Looks like the knowledge of thylacines didn't get programmed into the AI.

My question as soon as I started reading was, "So when can I make the movie of my novel?" "In the near future" sounds pretty good. Several Instagram AI accounts I follow have started putting up short video clips in addition to their still images. A lot of the stills are incredibly lifelike, but the animation needs some work. AI seems to have a lot of trouble with human hands, especially, and often renders extra digits in both still and video images. One of the IG accounts uses a recurring character, and he'll post a series of 15 or so images of the character in a specific scenario. A lot of times the AI has trouble consistently getting the character's features right. About half the images are accurate for the character, but the rest are jarring to see because to a human it's immediately obvious that while they're very similar, they aren't the same person. Well, pretend person.

My hope is that as the technology advances, the ability to detect it will advance, too. Computer technology is so far over my head that I can't even imagine how to approach the problem; the idea of reverse engineering probably isn't right, but I count on the ingenuity and anarchic tendencies of computer geeks to figure it out.

Fascinating/scary.

Do you think it'll ever get to being able to render in real-time with interactivity? I'm thinking of games here. I don't want that to ever replace curated artist-driven experiences, but as a concept it would be fascinating to see and try out.