AI Enables Mind-Reading Technology

Brain signals recorded by BMIs can be distilled to sentences and pictures with AI.

Foreword:

When I was growing up, the X-Men were more than just my favorite superheroes; they were icons that sparked my imagination. These characters, whose superhuman abilities resulted from genetic mutations, were famously known as 'mutants.' Among them stood Charles Xavier – Professor X – a leader as enigmatic as he was powerful.

In the comic, Professor X's signature ability was telepathy – the power to delve into the minds of friends (and enemies) to read and transmit thoughts. In essence, he perceived the distinct brain waves of others within a small radius around him. While this power wasn't as flashy as the abilities of many other characters, it seemed almost too implausible, even for the world of comics. Reflecting on it now, however, it ironically seems the most feasible. Why can't the human mind achieve such a feat? Or at least engineer a device to do so? After all, thoughts are just an intricate series of electrical signals traversing biological neural networks.

Indeed, the boundary between science fiction and reality is becoming increasingly blurred. The relentless advancement of medical technology, coupled with the rapid evolution of AI, is transforming once fanciful dreams into tangible realities. The capacity to communicate and connect, to understand beyond mere words, is shifting from the fantastical narratives of comic books to the realm of the possible – all driven by the unseen force of innovation.

Overview: Mind-Reading with AI

In the rapidly evolving landscape of neuroscience and artificial intelligence, the development of mind-reading technology stands as a significant milestone. Moving beyond the theoretical, this technology now demonstrates tangible capabilities in interpreting and reconstructing human thoughts from brain activity. Recent advancements in brain-computer interfaces (BCIs, also called brain-machine interfaces or BMIs) and sophisticated AI algorithms have led to breakthroughs in decoding neural patterns associated with speech, visual experiences, and even complex thought processes. This post examines these developments, providing a comprehensive overview of the current state of mind-reading technology along with the potential implications and challenges of this emerging field.

Introduction: Deciphering the Brain's Information Processing

What transpires within our brains during a cascade of thoughts? How do these complex biological networks construct our individual perceptions of reality? These fundamental questions have long captivated psychologists and neuroscientists, leading to extensive research and the use of diverse analytical tools to study brain functionality in various contexts. But what exactly are these tools, and what aspects of brain activity do they help us understand?

Neurons are at the heart of brain function, acting as fundamental units that communicate through electrical signals. These signals play a crucial role in encoding and storing our thoughts, ideas, and memories, and in the overall processing of information. Equally important are blood flow and oxygenation, which meet the brain's high metabolic demands and therefore indicate which brain regions are active. To study these processes, researchers record brain activity with BMI technologies such as functional magnetic resonance imaging (fMRI) and electroencephalography (EEG) (Figure 1). fMRI tracks changes in blood oxygenation (the blood-oxygen-level-dependent, or BOLD, signal) to identify brain areas that are active during different tasks, while EEG uses electrodes placed on the scalp to measure the brain's electrical activity. Using these tools, scientists can map specific brain regions to various cognitive functions and processes.
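Because fMRI measures blood oxygenation rather than electrical activity itself, its signal lags behind the underlying neural events. The minimal sketch below illustrates this, assuming the widely used "canonical" double-gamma hemodynamic response function (HRF) with commonly cited SPM-style parameters (peak shape 6, undershoot shape 16, undershoot ratio 1/6). The HRF model, the function name `double_gamma_hrf`, and the simulated 1-second burst are illustrative assumptions, not something described in this post.

```python
import math
import numpy as np

def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1.0 / 6.0):
    """Assumed SPM-like double-gamma HRF; t is in seconds (gamma scale fixed at 1 s)."""
    t = np.asarray(t, dtype=float)
    # Gamma density with shape k and scale 1: t^(k-1) * exp(-t) / Gamma(k)
    peak = t ** (peak_shape - 1) * np.exp(-t) / math.gamma(peak_shape)
    undershoot = t ** (undershoot_shape - 1) * np.exp(-t) / math.gamma(undershoot_shape)
    # Main positive response minus a smaller, later undershoot
    return peak - ratio * undershoot

dt = 0.1                      # time step in seconds
t = np.arange(0, 30, dt)      # 30 s of HRF support
hrf = double_gamma_hrf(t)

# Hypothetical neural event: a brief 1-second burst of activity starting at t = 10 s
time = np.arange(0, 60, dt)
neural = ((time >= 10) & (time < 11)).astype(float)

# Predicted BOLD signal: discrete approximation of (neural activity convolved with HRF)
bold = np.convolve(neural, hrf)[: len(time)] * dt

hrf_peak_time = t[np.argmax(hrf)]
bold_peak_time = time[np.argmax(bold)]
print(f"HRF peaks about {hrf_peak_time:.1f} s after an impulse")
print(f"BOLD response to the burst at 10 s peaks at about {bold_peak_time:.1f} s")
```

Under these assumptions, a burst that ends within one second produces a BOLD peak roughly five seconds later, which is one reason fMRI has poor temporal resolution compared with EEG.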

While advanced tools like fMRI and electrodes provide us with invaluable data, interpreting this information is not straightforward. The data is often complex and noisy, requiring sophisticated processing to derive meaningful insights. This challenge leads us to a pivotal question: How can we translate these intricate brain signals into coherent thoughts? Machine learning emerges as a key solution in this context. By analyzing complex neural data with advanced algorithms, we can start to identify patterns that correlate brain activity with specific thoughts and experiences. This approach is the foundation of recent breakthroughs in mind-reading technology, which we will explore in-depth, showcasing how researchers are beginning to unravel the complex language of the brain.
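As a toy illustration of identifying patterns that link brain activity to specific thoughts, here is a minimal sketch of one classic approach, a correlation-based (template-matching) classifier, run on synthetic data. Everything in it is an assumption for illustration: the "voxel" patterns are random numbers, the three "categories" are invented, and the noise level is arbitrary. Real studies use far more careful preprocessing and cross-validation.

```python
import numpy as np

rng = np.random.default_rng(0)
n_voxels, n_categories, n_train, n_test = 200, 3, 20, 30
noise_sd = 2.0  # assumed: noise is larger than the signal in any single voxel

# Hypothetical "true" activity pattern evoked by each category of thought/stimulus
true_patterns = rng.normal(size=(n_categories, n_voxels))

def simulate_trials(labels):
    """Noisy single-trial recordings: the true pattern for each label plus Gaussian noise."""
    return true_patterns[labels] + noise_sd * rng.normal(size=(len(labels), n_voxels))

train_labels = np.repeat(np.arange(n_categories), n_train)
test_labels = np.repeat(np.arange(n_categories), n_test)
X_train = simulate_trials(train_labels)
X_test = simulate_trials(test_labels)

# "Training": average each category's training trials into a template (averaging reduces noise)
templates = np.stack([X_train[train_labels == c].mean(axis=0) for c in range(n_categories)])

def zscore_rows(a):
    return (a - a.mean(axis=1, keepdims=True)) / a.std(axis=1, keepdims=True)

# Pearson correlation between every test trial and every template
corr = zscore_rows(X_test) @ zscore_rows(templates).T / n_voxels
predicted = corr.argmax(axis=1)  # pick the best-matching template
accuracy = (predicted == test_labels).mean()
print(f"decoding accuracy: {accuracy:.2f} (chance = {1 / n_categories:.2f})")
```

No single voxel is reliable on its own here, but the pattern across many voxels is; that is the intuition behind multivariate decoding.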

Claims: AI's Capability in Reading Human Minds

At the intersection of artificial intelligence and neuroscience, the capability to read human thought is beginning to flourish. Recent studies make a series of groundbreaking claims that underscore its emerging capabilities and vast potential:

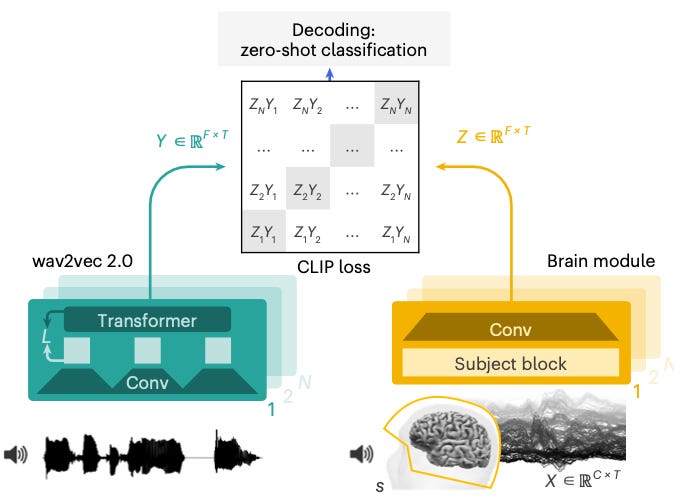

Speech and Language Decoding with MEG and EEG: A pivotal claim for mind reading is the ability to decode speech and language from brain activity, that is, to interpret the neural signals associated with speech and convert them into understandable language. This has now been famously demonstrated with non-invasive recordings from fMRI (Tang et al., 2023) and from MEG and EEG (Défossez et al., 2023). We will first discuss the MEG/EEG approach and its strategies.

The model, depicted in Figure 2, aims to decode speech from the brain activity of healthy participants. Brain activity was recorded with MEG or EEG while participants listened to stories or sentences. The model uses a pretrained 'speech module' to extract deep contextual representations from 3-second segments of speech, and it learns to align these representations with the corresponding 3-second windows of brain activity. The brain representation, denoted Z, is produced by a deep convolutional network. During evaluation, the model is given brain activity recorded during left-out sentences and, for each brain representation, computes the probability of each candidate 3-second speech segment. This decoding is 'zero-shot': the audio segments the model predicts do not need to be present in the training set, which makes the approach more general than standard classification methods.
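The evaluation step can be sketched as a CLIP-style contrastive setup: each brain representation Z is compared with the embedding of every candidate 3-second speech segment, and a softmax over those similarities gives the probability of each segment. This is a minimal sketch under heavy assumptions, not the authors' code: random vectors stand in for the speech module's outputs, the "brain" representations are simply noisy copies of the matching speech embeddings rather than the output of a convolutional network, and the temperature value is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
n_segments, dim = 50, 64

# Stand-ins for speech-module embeddings of 50 left-out 3-second segments
speech = rng.normal(size=(n_segments, dim))
# Stand-ins for brain representations Z: assumed to be noisy copies of the matching speech embeddings
brain = speech + 1.0 * rng.normal(size=(n_segments, dim))

def normalize(a):
    return a / np.linalg.norm(a, axis=1, keepdims=True)

def segment_probabilities(z, candidates, temperature=0.1):
    """Softmax over cosine similarities: row i gives P(segment j | brain representation i)."""
    logits = normalize(z) @ normalize(candidates).T / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)

def contrastive_loss(z, candidates, temperature=0.1):
    """InfoNCE-style training objective: the matching segment for row i is column i."""
    p = segment_probabilities(z, candidates, temperature)
    return -np.mean(np.log(np.diag(p)))

probs = segment_probabilities(brain, speech)
top1 = (probs.argmax(axis=1) == np.arange(n_segments)).mean()
print(f"loss: {contrastive_loss(brain, speech):.3f}, top-1 segment accuracy: {top1:.2f}")
```

Because the decoder only compares embeddings, any new speech segment can be added to the candidate set and scored without retraining, which is what makes the zero-shot setting possible.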

Speech and Language Decoding with fMRI

In the fMRI study (Tang et al., 2023), researchers recorded BOLD fMRI responses from subjects as they listened to 16 hours of narrative stories. They fitted an encoding model for each subject that predicted brain responses from the semantic features of the words in the stories (Figure 3). Reconstructing language from brain activity then involved:

Maintaining Candidate Word Sequences: As subjects listened to new words, the decoder kept track of potential word sequences.

Language Model Proposals and Encoding Model Scoring: A language model proposed continuations for each sequence, while the encoding model assessed the likelihood of these continuations based on the recorded brain responses. The most likely continuations were then retained.

Evaluation of the Decoder: The decoder's effectiveness was tested on brain responses recorded while subjects listened to new stories not used in training. For one subject, segments from four test stories were analyzed alongside the decoder's predictions. The decoder was able to accurately reproduce some words and phrases and capture the general meaning of many others.

This process illustrates the decoder's ability to not only replicate specific words and phrases from brain activity but also to grasp the broader context or gist of the narrative.
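The candidate-tracking procedure described above is essentially a beam search. The sketch below shows its skeleton under strong simplifying assumptions: a tiny hand-written table of next words stands in for the language model, random vectors stand in for semantic features, a fixed random linear map stands in for the fitted encoding model, each word is assumed to evoke exactly one response (ignoring the slow, overlapping fMRI signal), and candidates are scored with a Gaussian log-likelihood. All names (`NEXT_WORDS`, `propose_continuations`, and so on) are hypothetical; the original study used a neural language model and per-subject fMRI encoding models, not this toy.

```python
import math
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical toy "language model": allowed next words, each assumed equally likely
NEXT_WORDS = {
    "<s>": ["the", "a"],
    "the": ["dog", "cat", "door"],
    "a": ["dog", "cat"],
    "dog": ["ran", "barked"],
    "cat": ["ran", "slept"],
    "door": ["opened"],
    "ran": ["home"],
    "barked": ["loudly"],
    "slept": ["soundly"],
    "opened": ["slowly"],
}
VOCAB = sorted({w for ws in NEXT_WORDS.values() for w in ws})
FEATURES = {w: rng.normal(size=16) for w in VOCAB}  # stand-in semantic features
W_ENC = rng.normal(size=(16, 40))                   # stand-in encoding model: features -> 40 "voxels"
NOISE_SD = 1.0

def predicted_response(word):
    """Encoding model: predicted brain response evoked by one word."""
    return FEATURES[word] @ W_ENC

def log_likelihood(word, observed):
    """Gaussian log-likelihood (up to a constant) of the observed response given the word."""
    residual = observed - predicted_response(word)
    return -0.5 * np.sum(residual ** 2) / NOISE_SD ** 2

def propose_continuations(sequence):
    """Language model proposes next words, each with its log-probability."""
    options = NEXT_WORDS.get(sequence[-1], [])
    return [(w, -math.log(len(options))) for w in options]

def beam_search(responses, beam_width=2):
    beam = [(["<s>"], 0.0)]  # (candidate word sequence, cumulative score)
    for observed in responses:
        candidates = []
        for seq, score in beam:
            for word, lm_logp in propose_continuations(seq):
                candidates.append((seq + [word], score + lm_logp + log_likelihood(word, observed)))
        # Retain only the most likely continuations
        beam = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
    return beam[0][0][1:]  # best sequence, without the start token

# Simulate noisy "brain responses" to a true sentence, then decode them
true_sentence = ["the", "cat", "slept", "soundly"]
responses = [predicted_response(w) + NOISE_SD * rng.normal(size=40) for w in true_sentence]
decoded = beam_search(responses)
print("decoded:", " ".join(decoded))
```

The beam width trades accuracy for cost: keeping more candidates makes it less likely that the correct sequence is pruned early, at the price of more encoding-model evaluations per step.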

Visual Experience Reconstruction: Another significant claim in this domain is that recent advances in generative AI models make it possible to decode visual perception directly from brain activity. Researchers have shown that latent diffusion models, a cutting-edge image-generation technique, can reconstruct images seen by subjects based solely on their fMRI brain scans (Takagi and Nishimoto, 2023) (Figure 4). Rather than training or fine-tuning a complex deep generative model, the method fits simple linear models that map fMRI signals onto the representations of a pre-trained diffusion model, which then generates high-resolution images that match what participants viewed, with reported accuracies of over 80%. The study also provides an interpretable mapping between model components and computations in the brain's visual areas, suggesting how the iterative generation process in diffusion models may relate to hierarchical biological processing. By building on ready-made generative AI systems, 'brain reading' applications could scale more feasibly. The work represents an important step toward systems that can decode and reconstruct intricate mental images and scenes.
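The linear-mapping step can be sketched as L2-regularized (ridge) regression from voxel responses to an image's latent vector, scored with a two-way identification test: is each predicted latent more correlated with the correct image's latent than with another image's? This is a minimal sketch on synthetic data. The latent vectors, the voxel responses, the regularization strength `alpha`, and the helper names are all assumptions, the two-way test is just one common way to score such decoders, and the step of feeding predicted latents into a diffusion model to render images is omitted entirely.

```python
import numpy as np

rng = np.random.default_rng(3)
n_train, n_test, n_voxels, latent_dim = 1000, 50, 300, 32

# Synthetic ground truth: each image has a latent vector; voxels respond linearly plus noise
true_map = rng.normal(size=(latent_dim, n_voxels))
latents_train = rng.normal(size=(n_train, latent_dim))
latents_test = rng.normal(size=(n_test, latent_dim))
voxels_train = latents_train @ true_map + 5.0 * rng.normal(size=(n_train, n_voxels))
voxels_test = latents_test @ true_map + 5.0 * rng.normal(size=(n_test, n_voxels))

def fit_ridge(X, Y, alpha=100.0):
    """Closed-form ridge regression: B = (X^T X + alpha * I)^-1 X^T Y (alpha is an assumed value)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

B = fit_ridge(voxels_train, latents_train)  # maps voxel responses -> latent vectors
predicted_latents = voxels_test @ B

def two_way_identification(pred, true):
    """Fraction of (i, j) pairs, i != j, where pred[i] correlates more with true[i] than with true[j]."""
    n = len(pred)
    corr = np.corrcoef(pred, true)[:n, n:]  # block of pred-vs-true correlations
    correct = corr.diagonal()[:, None] > corr
    off_diagonal = ~np.eye(n, dtype=bool)
    return correct[off_diagonal].mean()

acc = two_way_identification(predicted_latents, latents_test)
print(f"two-way identification accuracy: {acc:.2f} (chance = 0.50)")
```

Keeping the decoder linear means only a small number of parameters are fitted to scarce fMRI data, while the heavy lifting of image generation is left to the pre-trained model.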

Each of these claims marks a significant step in bridging the gap between technology and the human mind, showcasing the vast potential of AI in understanding and interpreting the complexities of human cognition. However, these claims also necessitate a rigorous examination of their scientific validity and practical feasibility, which will be explored in the subsequent sections.

Impacts: The Ripple Effect of Mind-Reading Tech

Several major tech companies, including Meta, as well as a number of startups, are now working on commercializing this technology. These claimed mind-reading capabilities may bring a range of impacts, both transformative and challenging, across many sectors of society:

Interesting and exciting applications:

Medical Breakthroughs: One of the most profound impacts is in the realm of healthcare. Mind-reading AI has the potential to revolutionize treatments for neurological disorders, aid in rehabilitation, and offer communication solutions for those with speech and motor impairments. It could lead to better diagnostic tools, more personalized therapies, and enhanced patient care.

Enhanced Communication: For individuals with severe speech and motor limitations, this technology could provide an alternative communication method, allowing them to express their thoughts and needs more effectively. It opens up new channels for interaction, breaking barriers that once seemed insurmountable.

Research Advancements: In academic circles, mind-reading AI could significantly advance our understanding of the brain, offering insights into cognitive processes, memory formation, and even the nature of consciousness. This knowledge could spur further innovations in both AI and neuroscience.

Recording a dream: With the ability to record brain activity, we may be able to create real-time recordings of individuals’ subjective experiences. This could potentially extend to recording what we think about in our sleep. How interesting might it be to watch a movie of our dreams? - Nathan Slake.

Sending signals as well as receiving: If we can read signals from a brain, then perhaps we can also send signals to it. This would mean we could write experiences into a brain, perhaps even enabling virtual reality (VR) without a headset.

Truly scary - a nightmare for privacy: As AI begins to tap into the inner workings of the human mind, it raises serious questions about privacy and consent. The ability to decode thoughts and emotions could lead to misuse or ethical dilemmas, particularly regarding how this information is used and who has access to it. It is clear that many tech companies will gain these capabilities through BMIs built into their hardware.

Philip K. Dick's short story The Minority Report, later adapted into the film Minority Report, imagines a society in which police predict crimes and arrest people before the crimes happen. Even in a less Orwellian scenario, the prospect of AI systems that can decode private thoughts and emotions raises profound ethical and privacy issues. Technology continues to encroach on our personal rights and liberties.

The impacts of mind-reading AI extend far beyond the realms of technology and healthcare, touching on ethical, legal, and social dimensions.

Skeptical Analysis: Evaluating the State of Mind-Reading AI

While the potential of mind-reading AI is immense, I think there is still a long way to go before this sees much real-world application. Let’s consider a few of these hurdles:

Technological Limitations: Despite the advances, current AI and BMI technologies have limitations in accurately interpreting complex neural data. The human brain's intricate and dynamic nature poses significant challenges, and there is a risk of oversimplification or misinterpretation of nuanced brain signals. This technological gap underscores the need for cautious optimism in evaluating AI's ability to read minds accurately.

Replicability and Generalizability: Many of the breakthroughs in mind-reading AI come from tightly controlled experimental conditions with small sample sizes. The replicability of these results in more varied and naturalistic settings remains to be seen. Furthermore, the generalizability of these findings across diverse populations, with varying neural patterns and conditions, is not yet fully established.

Neuroscientific Uncertainties: Our current understanding of the brain and consciousness is still evolving. There are gaps in our knowledge about how certain cognitive processes and experiences are represented neurally. Mind-reading AI, built on this incomplete understanding, may not yet fully capture the essence of human thought and cognition.

In summary, while the advancements in mind-reading AI are undoubtedly groundbreaking, they are accompanied by significant scientific and technological challenges.

Conclusions and Next Steps

Mind-reading technology, with its remarkable capabilities, stands at the forefront of a new frontier in technology and neuroscience. The next steps involve:

Hardware advancements: Existing BMIs have clear limitations in temporal and spatial resolution. fMRI, for example, offers good spatial but poor temporal resolution, while EEG and MEG offer the reverse. Improving the quality of these signals should improve decoding accuracy.

Software advancements: Continued research to refine AI algorithms for more accurate prediction of thoughts.

Establishing Ethical Frameworks: Developing guidelines to safeguard individual privacy and consent in the use of mind-reading AI.

Cross-Disciplinary Collaboration: Collaboration between technologists, neuroscientists, ethicists, and policymakers to address the multifaceted implications of this technology.

In conclusion, mind-reading technology represents a groundbreaking stride in understanding the human brain. This technology will inevitably arrive, and it will do so sooner than we are ready. It is important to begin having discussions and educating the public, particularly due to the intrusive and dangerous nature of such technology.

Support:

These newsletters take a significant amount of effort to put together and are totally for the benefit of the reader. If you find these explorations valuable, there are multiple ways to show your support:

Engage: Like or comment on posts to join the conversation.

Subscribe: Never miss an update by subscribing to the Substack.

Share: Help spread the word by sharing posts with friends directly or on social media.

References

Benchetrit, Y., Banville, H. and King, J.R., 2023. Brain decoding: toward real-time reconstruction of visual perception. arXiv preprint arXiv:2310.19812.

Défossez, A., Caucheteux, C., Rapin, J., Kabeli, O. and King, J.R., 2023. Decoding speech perception from non-invasive brain recordings. Nature Machine Intelligence, pp.1-11.

Kawala-Sterniuk, A., Browarska, N., Al-Bakri, A., Pelc, M., Zygarlicki, J., Sidikova, M., Martinek, R. and Gorzelanczyk, E.J., 2021. Summary of over fifty years with brain-computer interfaces—a review. Brain Sciences, 11(1), p.43.

Takagi, Y. and Nishimoto, S., 2023. High-resolution image reconstruction with latent diffusion models from human brain activity. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 14453-14463).

Tang, J., LeBel, A., Jain, S. and Huth, A.G., 2023. Semantic reconstruction of continuous language from non-invasive brain recordings. Nature Neuroscience, pp.1-9.
